Workflows with DAGMan

Learn more at: https://htcondor.readthedocs.io/en/feature/users-manual/dagman-workflows.html

DAGMan (Directed Acyclic Graph Manager) is an HTCondor tool that organizes multiple jobs into a workflow. It is particularly useful for submitting jobs automatically in a given order, especially when the same workflow must be reproduced multiple times.

DAGMan submits the jobs to HTCondor in an order that respects the dependencies between them, and it is responsible for their scheduling, for recovery after failures, and for reporting their progress.

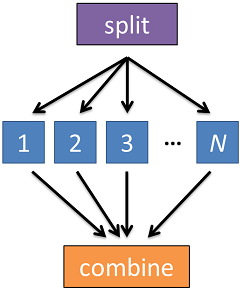

Simple workflow example

Describing Workflows as directed acyclic graphs (DAGs)

A workflow is represented by a DAG (Directed Acyclic Graph): a set of nodes together with their dependencies, expressed as parent-child relationships, and described in a DAG input file. “Acyclic” means that the graph contains no loops (i.e. no “cycles”): following the dependencies never leads back to a node already visited, so the workflow always has a start and an end.

A node is a unit of work that contains an HTCondor job and optional PRE and POST scripts that run before and after the job. Dependencies between nodes are described as directional connections; each connection has a parent and a child, and the parent node must finish running before the child starts. Any node can have an unlimited number of parents and children.

Basic DAG input file: JOB nodes, PARENT-CHILD directional connections

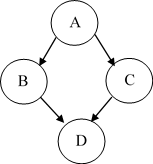

The user declares the nodes and their directional connections in the DAG input file. The simple diamond-shaped DAG shown in the image above is used as a starting point for the examples; it contains 4 nodes.

A very simple DAG input file for this diamond-shaped DAG is:

# File name: test.dag

JOB A A.sub

JOB B B.sub

JOB C C.sub

JOB D D.sub

PARENT A CHILD B C

PARENT B C CHILD D

The files A.sub, B.sub, C.sub and D.sub are ordinary HTCondor submit description files for the four nodes A, B, C and D.

Equivalently, some or all of the dependencies of the diamond-shaped DAG can be written on separate lines:

PARENT A CHILD B C

PARENT B CHILD D

PARENT C CHILD D
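To make the graph structure concrete, the following POSIX shell sketch (an illustration only, not how condor_dagman works internally) expands each PARENT ... CHILD ... line into individual parent-child pairs, checks that the two specifications above describe the same four dependencies, and feeds the pairs to the standard tsort utility to print one order in which the nodes could run. The helper name dag_edges and the file names one_line.dag and separate_lines.dag are hypothetical, and the sketch only handles JOB/PARENT/CHILD lines like the ones shown here.

```shell
#!/bin/sh
# Illustration only (not how condor_dagman works internally): list the
# parent -> child pairs of a DAG input file and print one valid node order.

# dag_edges FILE (hypothetical helper): expand every
# "PARENT p1 p2 ... CHILD c1 c2 ..." line into one "parent child" pair
# per line, i.e. every listed parent combined with every listed child.
dag_edges() {
    awk 'toupper($1) == "PARENT" {
        n = 0; m = 0; in_child = 0
        for (i = 2; i <= NF; i++) {
            if (toupper($i) == "CHILD") { in_child = 1; continue }
            if (in_child) child[++m] = $i
            else parent[++n] = $i
        }
        for (p = 1; p <= n; p++)
            for (c = 1; c <= m; c++)
                print parent[p], child[c]
    }' "$1"
}

# Work in a scratch directory so no existing file is overwritten
cd "$(mktemp -d)" || exit 1

# The two specifications of the diamond-shaped DAG given in the text
cat > one_line.dag <<'EOF'
JOB A A.sub
JOB B B.sub
JOB C C.sub
JOB D D.sub
PARENT A CHILD B C
PARENT B C CHILD D
EOF

cat > separate_lines.dag <<'EOF'
JOB A A.sub
JOB B B.sub
JOB C C.sub
JOB D D.sub
PARENT A CHILD B C
PARENT B CHILD D
PARENT C CHILD D
EOF

# Identical sorted pair lists mean identical dependencies
dag_edges one_line.dag | sort > edges1.txt
dag_edges separate_lines.dag | sort > edges2.txt
cmp -s edges1.txt edges2.txt && echo "same dependencies:" && cat edges1.txt

# One order compatible with all dependencies (A first, D last)
dag_edges one_line.dag | tsort
```

Both files yield the pairs A B, A C, B D and C D. tsort prints A first and D last; B and C may appear in either order, which reflects the fact that nothing prevents them from running in parallel. If the pairs contained a loop, tsort would report it: that is exactly the kind of graph the “acyclic” requirement rules out.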

Node Job Submit File Contents

Each node in a DAG may use its own submit description file. Such a file may queue many jobs, but they must all belong to a single cluster: DAGMan cannot handle a submit description file that produces multiple job clusters.

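As a first try, you can reproduce the following example. Each submit file below queues 10 jobs (queue 10) in a single cluster, so the workflow behaves like 10 copies of the diamond running side by side. Note, however, that dependencies apply to whole nodes: B and C start only once all 10 jobs of A have finished, and D only once all jobs of B and C have finished.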

• A.sub

executable=/usr/bin/echo

universe=vanilla

arguments="Test A.$(Process)"

output=outA.$(Process)

error=errA.$(Process)

log=results.log

notification=never

queue 10

• B.sub

executable=test_dag.sh

universe=vanilla

arguments = B outA.$(Process)

output=test_dagB.$(Process)

error=errB.$(Process)

transfer_input_files=outA.$(Process)

log=results.log

request_cpus = 1

request_memory = 1024

notification=never

queue 10

• C.sub

executable=test_dag.sh

universe=vanilla

arguments = C outA.$(Process)

output=test_dagC.$(Process)

error=errC.$(Process)

transfer_input_files=outA.$(Process)

log=results.log

request_cpus = 1

request_memory = 1024

notification=never

queue 10

• D.sub

executable=/usr/bin/cat

universe=vanilla

arguments = test_dagB.$(Process) test_dagC.$(Process)

output=outD.$(Process)

error=errD.$(Process)

transfer_input_files = test_dagB.$(Process) test_dagC.$(Process)

log=results.log

request_cpus = 1

request_memory = 1024

notification=never

queue 10

• test_dag.sh

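#!/bin/sh

# Usage: test_dag.sh NODE_NAME INPUT_FILE
# Reads INPUT_FILE (an output file of node A) and prints one line combining NODE_NAME and that content

ret=$(/usr/bin/cat "$2")

/usr/bin/echo "Transform $1 on $ret"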
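Before submitting, it can help to check the node commands by hand. The sketch below is a hypothetical local dry run of one copy of the diamond (Process index 0) without HTCondor: it runs the commands of nodes A, B, C and D in dependency order inside a scratch directory, using a portable copy of test_dag.sh (plain cat and echo instead of /usr/bin/cat and /usr/bin/echo). It does not reproduce HTCondor's file transfer, the 10-job clusters, or any scheduling.

```shell
#!/bin/sh
# Hypothetical local dry run of ONE copy (Process index 0) of the diamond
# workflow, without HTCondor: the node commands are run by hand, in order.
set -e

# Scratch directory, so nothing in the current directory is touched
cd "$(mktemp -d)"

# Portable copy of test_dag.sh (plain cat/echo instead of /usr/bin/...)
cat > test_dag.sh <<'EOF'
#!/bin/sh
ret=$(cat "$2")
echo "Transform $1 on $ret"
EOF
chmod +x test_dag.sh

p=0                                              # plays the role of $(Process)
echo "Test A.$p" > "outA.$p"                     # node A (echo Test A.0)
./test_dag.sh B "outA.$p" > "test_dagB.$p"       # node B, needs outA.0
./test_dag.sh C "outA.$p" > "test_dagC.$p"       # node C, needs outA.0
cat "test_dagB.$p" "test_dagC.$p" > "outD.$p"    # node D, needs B and C

cat "outD.$p"
```

The final file outD.0 contains the two lines “Transform B on Test A.0” and “Transform C on Test A.0”, which is what each outD.N file of the real workflow should contain for its own index N.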

Submitting and monitoring a DAG

Submit the DAG with the condor_submit_dag command. This places a single DAGMan job (condor_dagman) in the queue, which then submits the node jobs itself.

$ cd /mustfs/LAPP-DATA/calcul/alice/HT-CONDOR/DAG

$ condor_submit_dag test.dag

--------------------------------------------------------------------

File for submitting this DAG to HTCondor : test.dag.condor.sub

Log of DAGMan debugging messages : test.dag.dagman.out

Log of HTCondor library output : test.dag.lib.out

Log of HTCondor library error messages : test.dag.lib.err

Log of the life of condor_dagman itself : test.dag.dagman.log

Submitting job(s).

1 job(s) submitted to cluster 38317.

--------------------------------------------------------------------

DAGMan runs as a job in the queue (here cluster 38317). A few seconds later, the 10 jobs of node A (cluster 38320) appear, idle or running: they were submitted automatically by the DAGMan job. Once all jobs of A have completed, B and C are submitted, and D is submitted once B and C have both completed.

$ condor_q -nobatch

-- Schedd: host.in2p3.fr : <134.158.x.x:9618?... @ 03/07/22 16:51:05

ID OWNER SUBMITTED RUN_TIME ST PRI SIZE CMD

38317.0 alice 3/7 16:51 0+00:00:02 R 0 0.3 condor_dagman -p 0 -f -l . -Lockfile test.dag.lock -AutoRescue 1 -DoRescueFrom

38320.0 alice 3/7 16:51 0+00:00:00 I 0 0.0 echo Test A.0

38320.1 alice 3/7 16:51 0+00:00:00 R 0 0.0 echo Test A.1

38320.2 alice 3/7 16:51 0+00:00:00 R 0 0.0 echo Test A.2

38320.3 alice 3/7 16:51 0+00:00:00 R 0 0.0 echo Test A.3

38320.4 alice 3/7 16:51 0+00:00:00 R 0 0.0 echo Test A.4

38320.5 alice 3/7 16:51 0+00:00:00 R 0 0.0 echo Test A.5

38320.6 alice 3/7 16:51 0+00:00:00 I 0 0.0 echo Test A.6

38320.7 alice 3/7 16:51 0+00:00:00 I 0 0.0 echo Test A.7

38320.8 alice 3/7 16:51 0+00:00:00 I 0 0.0 echo Test A.8

38320.9 alice 3/7 16:51 0+00:00:00 I 0 0.0 echo Test A.9

Total for alice: 10 jobs; 0 completed, 0 removed, 5 idle, 5 running, 0 held, 0 suspended.
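The submission order described above (A, then B and C together, then D) follows one rule: a node is submitted as soon as all of its parents have completed. The shell sketch below simulates that rule in rounds on the parent-child pairs of the diamond. It is a simplification under an explicit assumption: every node submitted in a round finishes before the next round starts, whereas the real condor_dagman reacts to each completion as it happens. The helper name dag_rounds is hypothetical.

```shell
#!/bin/sh
# dag_rounds (hypothetical helper): read "parent child" pairs on stdin and
# print, round by round, the nodes whose parents have all completed.
# Assumption: all nodes of a round complete before the next round starts.
dag_rounds() {
    awk '
    NF == 2 {
        node[$1] = 1; node[$2] = 1
        npar[$2]++; par[$2, npar[$2]] = $1      # remember each parent of $2
    }
    END {
        left = 0
        for (n in node) left++
        for (round = 1; left > 0; round++) {
            ready = ""
            for (n in node) {
                if (n in done) continue
                ok = 1
                for (i = 1; i <= npar[n]; i++) {
                    p = par[n, i]
                    if (!(p in done)) ok = 0    # a parent has not completed yet
                }
                if (ok) { ready = ready " " n; newly[n] = 1 }
            }
            if (ready == "") { print "cycle detected"; exit 1 }
            for (n in newly) { done[n] = 1; left-- }
            split("", newly)                    # empty the array portably
            print "round " round ":" ready
        }
    }'
}

# Parent-child pairs of the diamond (PARENT A CHILD B C, PARENT B C CHILD D)
printf '%s\n' 'A B' 'A C' 'B D' 'C D' | dag_rounds
```

This prints “round 1: A”, then a round 2 containing B and C (in either order, since awk does not define the iteration order of arrays), then “round 3: D”. If the pairs contain a loop, no node ever becomes ready and the sketch stops with “cycle detected”.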

The condor_dagman job process creates several status files:

- *.condor.sub and *.dagman.log are the submit description file and the job event log of the DAGMan job itself, as for any queued job

- *.dagman.out contains detailed logging (look here first for errors)

- *.lib.err/out contain std err/out for the DAGMan job process

- *.nodes.log is a combined log of all jobs within the DAG

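If these files already exist from a previous submission of the same DAG, condor_submit_dag may refuse to run: use -f (-force) to overwrite them, or -update_submit to update only an existing *.condor.sub file.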

On DAG completion, the following files are available:

- *.metrics is a summary of events and outcomes

- *.dagman.log notes the completion of the DAGMan job

Removing a DAG

Remove the DAGMan job in order to stop and remove the entire DAG:

$ condor_rm <dagman_jobID>

Removing a DAG produces a rescue file named after the DAG input file with the suffix .rescue001 (here test.dag.rescue001).

A rescue file is created whenever a DAG is removed from the queue by the user (condor_rm), and automatically when:

- a node fails, once DAGMan has run every other node that can still run,

- the DAG is aborted,

- the DAG is halted and not unhalted.
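When the same DAG input file is submitted again with condor_submit_dag, DAGMan by default runs from the most recent rescue file (the -AutoRescue 1 argument visible in the condor_q output above), so nodes that already completed are not rerun. To see which rescue file that would be, a small shell sketch like the following can help; latest_rescue is a hypothetical helper, and it assumes the three-digit numbering (rescue001, rescue002, ...) described above.

```shell
#!/bin/sh
# latest_rescue DAGFILE (hypothetical helper): print the most recent rescue
# file of DAGFILE, or nothing if there is none. Rescue files are numbered
# DAGFILE.rescue001, DAGFILE.rescue002, ...; because the numbers are
# zero-padded, sorting the names as text also sorts them numerically.
latest_rescue() {
    ls -1 "$1".rescue[0-9][0-9][0-9] 2>/dev/null | sort | tail -n 1
}

# Demonstration with empty placeholder files in a scratch directory
cd "$(mktemp -d)" || exit 1
touch test.dag test.dag.rescue001 test.dag.rescue002
latest_rescue test.dag      # prints test.dag.rescue002
```

A rescue file is itself a DAG input file in which completed nodes are marked DONE, so it can be inspected with a text editor before resubmitting. To restart from an older rescue file instead, condor_submit_dag accepts -DoRescueFrom followed by the rescue number.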

Default File Organization

condor_dagman assumes that all relative paths in a DAG input file and the associated HTCondor submit description files are relative to the current working directory when condor_submit_dag is run. This works well for a single DAG, but it causes problems when multiple independent DAGs are submitted with a single invocation of condor_submit_dag. Each of these independent DAGs would logically live in its own directory, so that it could be run or tested independently of the other DAGs. In that case, all file references should instead be relative to each DAG’s own directory.

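There are two ways to make file locations relative to a DAG’s own directory; do not use both at the same time:

- use the condor_submit_dag command with the option -usedagdir (-UseDagDir), so that each DAG runs in the directory containing its DAG input file;

- use the DIR keyword on the JOB lines of the DAG input file to specify the directory in which each node’s job is submitted (and where its submit file is found).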
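The following shell sketch illustrates the DIR form on the diamond example: the submit files are placed in their own directory and each JOB line names that directory, so condor_dagman changes into it before submitting the node job, and relative paths in the submit files are resolved from there. The directory and file names (workflows/diamond, top.dag) are hypothetical, the submit files are empty placeholders, and condor_submit_dag is not actually called.

```shell
#!/bin/sh
# Sketch of a per-DAG directory layout using the DIR keyword of JOB lines.
# Directory and file names (workflows/diamond, top.dag) are hypothetical.
cd "$(mktemp -d)" || exit 1
mkdir -p workflows/diamond

# The submit files live in workflows/diamond/ (empty placeholders here)
for n in A B C D; do
    : > "workflows/diamond/$n.sub"
done

# Top-level DAG: each JOB line names the directory its node job runs in,
# so relative paths inside A.sub ... D.sub are resolved from workflows/diamond/
cat > top.dag <<'EOF'
JOB A A.sub DIR workflows/diamond
JOB B B.sub DIR workflows/diamond
JOB C C.sub DIR workflows/diamond
JOB D D.sub DIR workflows/diamond
PARENT A CHILD B C
PARENT B C CHILD D
EOF

# Check that every submit file referenced by a JOB line exists where DIR says
awk 'toupper($1) == "JOB" && toupper($4) == "DIR" { print $5 "/" $3 }' top.dag |
while read -r f; do
    [ -f "$f" ] && echo "ok: $f" || echo "missing: $f"
done

# Submission (not run here):            condor_submit_dag top.dag
# Alternative without DIR (do not combine the two): put a DAG file inside
# each DAG directory and submit with     condor_submit_dag -usedagdir workflows/diamond/diamond.dag
```

With DIR, a single top-level DAG can mix nodes from several directories; with -usedagdir, each DAG input file stays self-contained in its own directory and several of them can be passed to one condor_submit_dag call.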

Learn more at: https://htcondor.readthedocs.io/en/feature/users-manual/dagman-workflows.html#file-paths-in-dags